TL;DR

Conversations at CES 2026 reflected a shift from experimenting with AI to deploying it for real needs. Enterprises are addressing governance, accountability, and risk as current operational requirements rather than future considerations.

Hollywood studios and the creator economy raised concerns about ownership and misuse of content. Talent-focused organizations flagged the uncredited use of likeness, especially as generative AI tools proliferate.

Enterprise AI teams emphasized that AI cannot be scaled safely without governance controls that ensure AI-generated content and decisions are identifiable, auditable, and easy to integrate with existing security and risk frameworks.

Regulatory pressure from laws such as the California AI Transparency Act and the EU AI Act, combined with the public’s need for media authenticity, is accelerating demand for watermarking and durable content credentials to support compliance and transparency.

SAG-AFTRA President Sean Astin speaking with Dr. Eric Wengrowski.

The conversations at CES 2026 differed significantly from those in 2025: AI was not just discussed but deployed. Companies that visited the Steg.AI booth consistently said that AI is no longer viewed as a distant future capability but as operational infrastructure being integrated into enterprise workflows, content pipelines, and physical products. As adoption increases, organizations are shifting their focus to scaling AI deployment ethically through the lens of governance, accountability, and risk management.

Trust was another common topic raised by security, legal, and production leaders who stopped by the booth. As generative AI produces content that is increasingly indistinguishable from authentic media, companies are facing growing challenges related to provenance, intellectual property protection, and regulatory exposure. These leaders emphasized that media authentication and content verification must be addressed as AI is deployed, not treated as secondary controls after the fact.

Hollywood and the Creator Economy: Trust, Rights, and Authenticity at Scale

Conversations with Hollywood studios, media platforms, and creators who visited the Steg.AI booth revealed widespread concern about how generative AI is reshaping media ownership and attribution. Teams described increasing difficulty in proving authorship, protecting talent likeness, and securing sensitive content. Without verifiable provenance, enforcing rights and responding to misuse become slow, manual, and legally complex.

From an enterprise security perspective, these discussions reinforced that media authenticity is no longer a concern limited to creative teams. It is becoming a company-wide governance challenge that intersects with IP protection, fraud prevention, and regulatory compliance. As content workflows become more automated and more reliant on AI, firms are actively looking for mechanisms that identify content origin and integrate seamlessly with AI deployment.

Steg.AI’s robust watermarks establish ownership and help protect against leaks and piracy by allowing organizations to identify the source of unauthorized usage and distribution even after content has been altered or shared across platforms. In addition, Steg.AI gives talent and rights holders the ability to trace the origin of content when their likeness is used online, supporting the enforcement of contractual rights, including those that cover the use of AI to modify media.

AI Deployment and Scaling: Governance as an Enterprise Control Plane

Enterprise security and AI teams raised concerns about scaling AI without introducing unmanaged or unknown risk. Many described challenges related to unclear ownership of data, lack of accountability both internally and externally for AI-generated outputs, and limited ability to audit decisions once AI systems are deployed. Because AI decision-making is often opaque, teams struggled to attach reasoning to certain outputs, increasing pressure to ensure these systems remain governable.

These conversations made it clear that enterprise AI success depends on more than model accuracy or performance. Companies need mechanisms to monitor, manage, and explain AI behavior across complex environments. For CISOs, AI governance is increasingly viewed as an extension of enterprise security architecture rather than a new, standalone innovation initiative.

Steg.AI supports governance by watermarking content at the point of generation. This ensures that AI-generated and AI-assisted outputs remain identifiable and attributable throughout their lifecycle. By making the origin of content explicit, Steg.AI helps enterprises maintain accountability for AI-generated materials, reduce ambiguity around authorship, and manage legal, reputational, and operational risk associated with large-scale AI adoption.

AI Watermarking, Media Authenticity, and Safety

Generative AI companies raised questions about how to operationalize compliance with emerging regulations such as the California AI Transparency Act and the EU AI Act. These conversations reflected a broader concern that regulatory requirements are advancing faster than most enterprises can adapt their technical systems and workflows.

A recurring issue was the lack of a consistent, industry-wide mechanism to identify whether content is authentic, AI-generated, or AI-modified. Without reliable methods to label and verify content origin, enterprises struggle to meet transparency obligations, respond to audits, and manage downstream legal and reputational risk. This challenge becomes more acute as synthetic media moves rapidly across internal teams, partners, and public platforms.
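To make the labeling and verification gap concrete, here is a minimal sketch of a signed provenance record that tags content as authentic, AI-generated, or AI-modified. It is an illustration under stated assumptions, not Steg.AI's method and not an industry standard: the names `ProvenanceRecord`, `issue_record`, and `verify_record` are hypothetical, and the record is a sidecar built from a content hash and an HMAC rather than a watermark. Unlike a robust watermark, this check fails as soon as a single byte of the content changes.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass

# Hypothetical origin labels, mirroring the three cases named in the text.
ORIGINS = {"authentic", "ai_generated", "ai_modified"}


@dataclass(frozen=True)
class ProvenanceRecord:
    content_sha256: str  # hash of the exact bytes being labeled
    origin: str          # one of ORIGINS
    issuer: str          # who is asserting the label (illustrative field)


def _payload(record: ProvenanceRecord) -> bytes:
    # Canonical JSON so the same record always serializes to the same bytes.
    return json.dumps(asdict(record), sort_keys=True).encode("utf-8")


def issue_record(content: bytes, origin: str, issuer: str, key: bytes):
    """Label content and return (record, tag); tag is an HMAC over the record."""
    if origin not in ORIGINS:
        raise ValueError(f"unknown origin label: {origin!r}")
    record = ProvenanceRecord(hashlib.sha256(content).hexdigest(), origin, issuer)
    tag = hmac.new(key, _payload(record), hashlib.sha256).hexdigest()
    return record, tag


def verify_record(content: bytes, record: ProvenanceRecord, tag: str, key: bytes) -> bool:
    """Return True only if the record is untampered and matches the content."""
    expected = hmac.new(key, _payload(record), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, tag):
        return False  # the label or issuer was changed after signing
    return hashlib.sha256(content).hexdigest() == record.content_sha256
```

An auditor holding the same key could call `verify_record` on a file and its record to confirm that the origin label was not edited after issuance. Because the label travels beside the content rather than inside it, a sidecar like this is easy to strip or lose in distribution.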

As generative AI adoption accelerates, AI watermarking, provenance, and verification technologies are increasingly seen as foundational controls for AI safety. These capabilities enable enterprises to establish transparency, maintain accountability, and support trust across complex content ecosystems.

Steg.AI helps organizations meet emerging regulatory requirements by watermarking generative AI outputs in alignment with laws such as the California AI Transparency Act and the EU AI Act. These watermarks are attached to durable content credentials, providing an additional layer of media authenticity beyond surface-level metadata. This allows enterprises to demonstrate compliance, strengthen trust in digital content, and support verifiable provenance across real-world distribution environments.

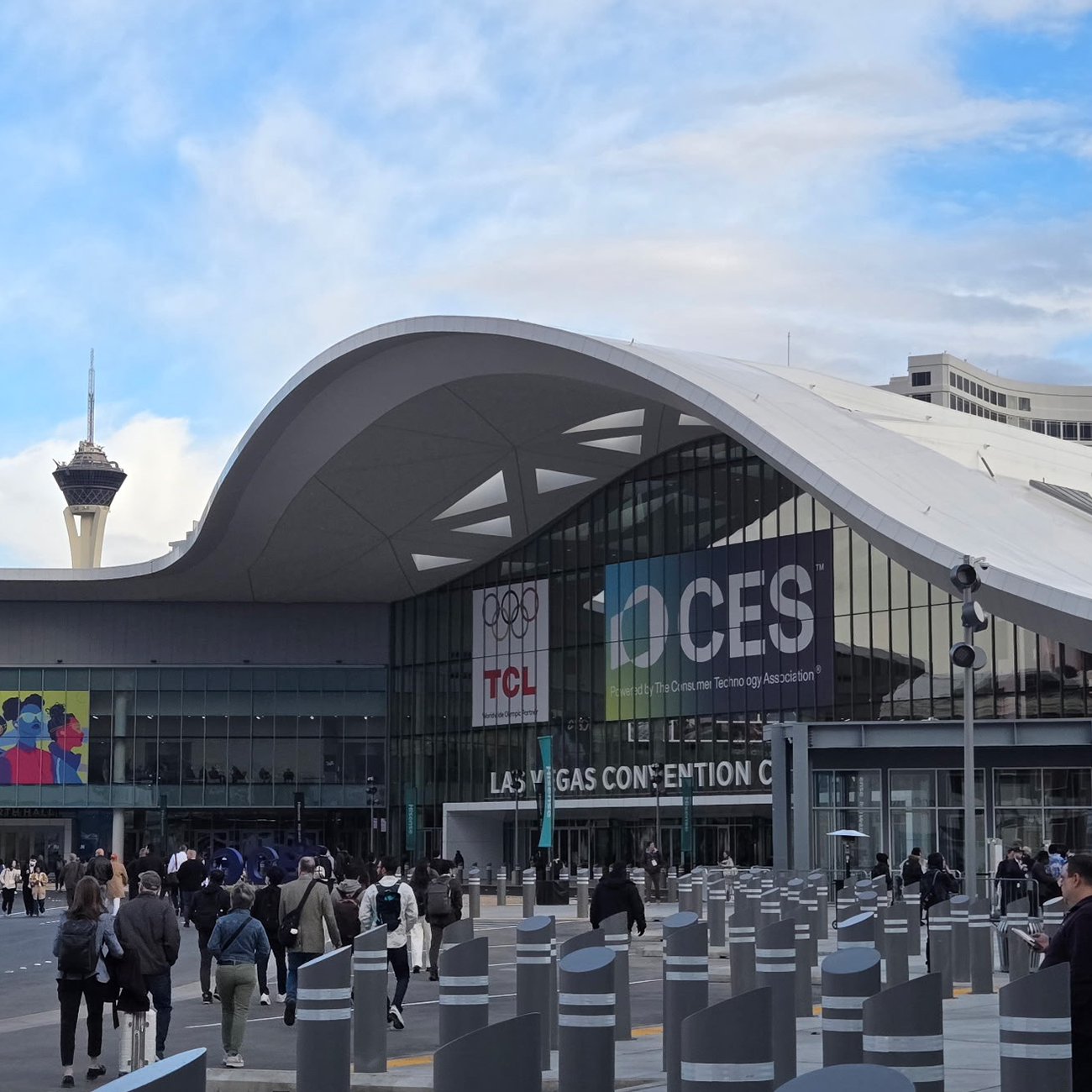

Steg.AI team at CES 2026.