How It Works

Introduction

Deepfakes are synthetic media (e.g., audio, images, videos) generated by machine learning algorithms that replace one person’s likeness (face, voice, etc.) with that of another. Examples include a deepfake video of Anderson Cooper and one of Morgan Freeman. You may be able to spot inconsistencies in these deepfakes, but overall, they are quite realistic and convincing. And as these machine learning algorithms continue to improve, the difference between real and fake will likely become imperceptible to the average person.

While not all deepfakes are malicious, their potential for malicious use has set off alarm bells around the world. Governments and politicians worry about the likenesses of public figures being used to spread misinformation and manipulate elections (e.g., the Fake Joe Biden Robocall and The AI Trickery Shaping India’s 2024 Election); celebrities worry about their likeness being used without their permission; and all of us worry about knowing what we can trust. With the advent of this deepfake revolution, seeing is no longer believing.

However, just as machine learning technology can be used to generate deepfakes, it can also be used to detect them and, more generally, to authenticate digital media. Steg AI offers a unique, state-of-the-art solution to deepfake detection and content authentication. In particular, we use our in-house deep learning technology to invisibly watermark digital assets with unique identifying information that allows us to authenticate that content later. Moreover, Steg AI’s products support and enhance the Coalition for Content Provenance and Authenticity (C2PA) standard.

Steg AI’s Invisible Watermarks

Steg AI invisibly watermarks digital content (e.g., images, videos, documents, audio) using proprietary deep learning technology that we develop in-house. Our watermarks subtly modify the content to embed new information. That information can be anything the user wants, from basic information such as author and license to more complex information such as the remote URL of a C2PA content credential.
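Because the payload can be anything the user wants, it has to be reduced to a bit string before it can be embedded. The sketch below shows one simple way to do that, assuming a hypothetical format (UTF-8 JSON behind a 16-bit length prefix). The function names, field names, and URL are illustrative placeholders, not Steg AI's actual payload format.

```python
import json


def payload_to_bits(payload: dict) -> list:
    """Serialize `payload` as a 16-bit length prefix plus UTF-8 JSON, then expand to bits."""
    data = json.dumps(payload, separators=(",", ":"), sort_keys=True).encode("utf-8")
    if len(data) >= 2 ** 16:
        raise ValueError("payload too large for a 16-bit length prefix")
    framed = len(data).to_bytes(2, "big") + data
    # Most significant bit first within each byte.
    return [(byte >> shift) & 1 for byte in framed for shift in range(7, -1, -1)]


def bits_to_payload(bits: list) -> dict:
    """Inverse of payload_to_bits: regroup bits into bytes, read the length, parse the JSON."""
    raw = bytearray()
    for i in range(0, len(bits) - 7, 8):
        byte = 0
        for bit in bits[i:i + 8]:
            byte = (byte << 1) | bit
        raw.append(byte)
    length = int.from_bytes(raw[:2], "big")
    return json.loads(raw[2:2 + length].decode("utf-8"))


# Hypothetical payload mixing basic fields with a manifest URL.
payload = {
    "author": "Jane Doe",
    "license": "CC-BY-4.0",
    "manifest_url": "https://manifests.example.com/abc123",
}
bits = payload_to_bits(payload)
print(bits_to_payload(bits) == payload)  # True
```

A production watermark would typically also add error-correcting redundancy so that the payload can still be decoded after edits introduce bit errors.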

The figure below shows an example of the watermarking technology being applied to an image. The image on the left is the original and the image on the right is the watermarked version.

With images, the pixels represent the underlying data, and our watermark is baked into those pixels through small perturbations in intensity values. A major advantage of this approach is that the watermark is nearly impossible to remove. For example, removing the image’s metadata has no impact on the watermark whatsoever, and the watermark will survive more complex image manipulations such as cropping, enveloping, occlusions, blur, and color shifts. For this reason, our watermarks complement secure metadata standards like C2PA content credentials: even if the C2PA content credential is stripped from the image, the watermark still points to the remote manifest.
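To make the idea of information living in the pixels concrete, here is a toy sketch using a classical technique, quantization index modulation (QIM) applied to block means, in pure Python. It is not Steg AI's method, which relies on proprietary deep learning; the step size, block layout, and function names are illustrative assumptions. It demonstrates two properties from the paragraph above: decoding reads only pixel values, so deleting metadata changes nothing, and each pixel moves by at most a few intensity levels.

```python
import random

STEP = 8  # quantization step in intensity levels (illustrative); larger = more robust, more visible


def _blocks(n_pixels, n_bits):
    """Split pixel indices into n_bits equal, contiguous blocks (leftover pixels are unused)."""
    size = n_pixels // n_bits
    return [range(k * size, (k + 1) * size) for k in range(n_bits)]


def embed(pixels, bits, step=STEP):
    """Hide one bit per block by nudging the block mean onto a bit-dependent lattice."""
    out = list(pixels)
    for bit, block in zip(bits, _blocks(len(pixels), len(bits))):
        mean = sum(pixels[i] for i in block) / len(block)
        offset = bit * step / 2  # bit 0 -> multiples of step, bit 1 -> shifted by step/2
        target = round((mean - offset) / step) * step + offset
        shift = target - mean  # |shift| <= step / 2
        for i in block:
            out[i] = min(255, max(0, round(pixels[i] + shift)))
    return out


def extract(pixels, n_bits, step=STEP):
    """Blind decoding: needs only the pixels, not the original image or its metadata."""
    bits = []
    for block in _blocks(len(pixels), n_bits):
        r = (sum(pixels[i] for i in block) / len(block)) % step
        dist0 = min(r, step - r)   # distance to the bit-0 lattice
        dist1 = abs(r - step / 2)  # distance to the bit-1 lattice
        bits.append(1 if dist1 < dist0 else 0)
    return bits


rng = random.Random(0)
original = [rng.randint(20, 235) for _ in range(64 * 64)]  # toy 64x64 grayscale image, flattened
image = {"pixels": original, "metadata": {"author": "Jane Doe"}}
secret = [1, 0, 1, 1, 0, 0, 1, 0]

image["pixels"] = embed(image["pixels"], secret)
del image["metadata"]  # stripping metadata does not touch the pixels
noisy = [p + rng.choice((-1, 0, 1)) for p in image["pixels"]]  # mild per-pixel noise

print(extract(noisy, len(secret)) == secret)  # True
print(max(abs(a - b) for a, b in zip(original, image["pixels"])) <= STEP // 2)  # True
```

This toy is far less robust than a learned watermark: cropping, for example, misaligns its blocks, so decoding generally fails.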

For a detailed walk-through of Steg AI watermarking, check out our Technology page.

Using Steg AI Watermarking Technology for Content Authentication

Steg AI watermarks enable deepfake detection and content authentication. The key idea is that all digital media should be watermarked before release, so that the invisible watermark acts as a stamp of authenticity. Later, if the provenance of the digital file is called into question, we read the watermark to reveal the file’s origin and authenticate it. The process can be broken down into two steps.

Step 1: Apply the watermark.

The figure below outlines a workflow that often works well for our customers: they simply hand off their digital media to Steg AI for watermarking before disseminating it. This can be done with the easy-to-use Steg AI web application or programmatically through the Steg AI API; alternatively, we can integrate with your Drive/DAM or create a plugin for your existing workflow.

Note that the watermarking process also updates the existing C2PA content credential attached to the file or adds a new one if the content credential is missing. As part of this process, the watermark is linked to the remote C2PA content credential URL. This is important because even if the C2PA content credential is stripped, it can still be recovered through the watermark. Step 2 below provides some examples of C2PA content credential recovery.
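The bookkeeping in Step 1 can be sketched as follows. Everything here is hypothetical: `Asset`, `prepare_for_release`, the plain dictionary standing in for a C2PA manifest, and the in-memory store standing in for remote manifest hosting are illustrative assumptions rather than the Steg AI API, and the actual watermark embedding is left out. The point is the link: the URL of the hosted manifest becomes the watermark payload, so the manifest can still be found after the embedded credential is stripped.

```python
import uuid
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Asset:
    content: bytes                     # the media itself (pixels, audio samples, ...)
    credential: Optional[dict] = None  # embedded credential; may be stripped downstream
    watermark: Optional[str] = None    # payload the invisible watermark carries


def prepare_for_release(asset: Asset, manifest_store: dict, base_url: str) -> Asset:
    """Hypothetical Step 1: update or create a credential, host it, and link the watermark to it."""
    # Reuse the existing credential if present, otherwise start a new one.
    # (A plain dict stands in for a real C2PA manifest here.)
    manifest = dict(asset.credential or {})
    manifest["actions"] = list(manifest.get("actions", [])) + ["watermarked"]

    # "Publish" the manifest at a remote URL; a dict simulates the remote store.
    url = f"{base_url}/{uuid.uuid4().hex}"
    manifest_store[url] = manifest

    # A real system would embed `url` into `content`; here we only record the payload.
    return replace(asset, credential=manifest, watermark=url)


store = {}
released = prepare_for_release(Asset(b"raw image bytes"), store, "https://manifests.example.com")
stripped = replace(released, credential=None)  # e.g., a platform strips metadata on upload
print(store[stripped.watermark] == released.credential)  # True
```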

Step 2: Authenticate as needed.

The digital media has now been disseminated, which means anyone with access to it is free to edit it however they want and repost the edited versions. Edits come in many forms and need to be treated differently depending on the customer scenario. Let’s walk through some common customer scenarios.

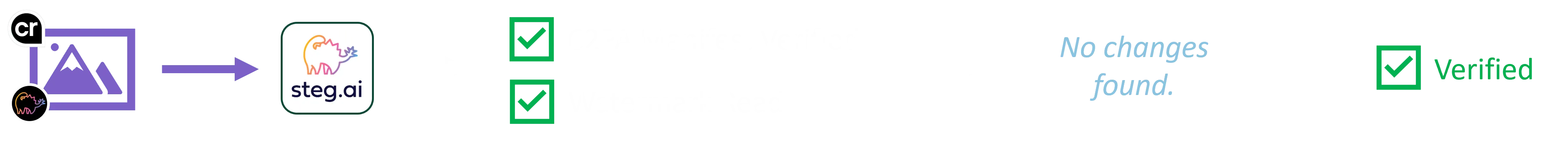

Scenario 1. The figure above outlines the flow of the first scenario. Reading from left to right, a digital asset carrying both a C2PA content credential and a Steg AI watermark is given to Steg AI to authenticate. The Steg AI system identifies both the C2PA content credential and the watermark, analyzes the asset and finds no changes, and returns a result that the asset is verified.

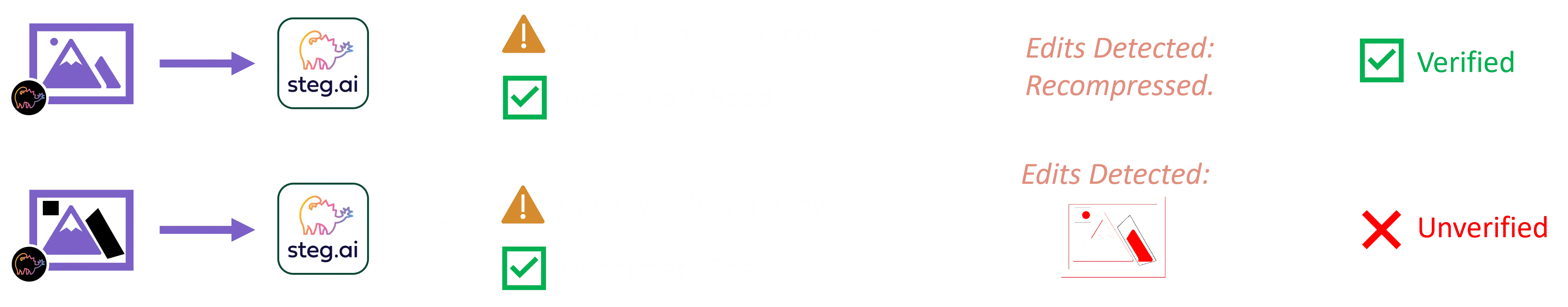

Scenario 2. The figure above outlines the flow of a second scenario, in which a digital asset that is missing its content credential is given to Steg AI to authenticate. The two rows show two possible outcomes. In both, the watermark is successfully read and the C2PA content credential is recovered from the remote URL stored in the watermark. In the top row, analysis reveals that the asset has been recompressed, but since recompression is an allowable edit for this particular customer, Steg AI returns that the asset is verified. In the bottom row, however, analysis detects significant edits to the content, so Steg AI returns an unverified result.

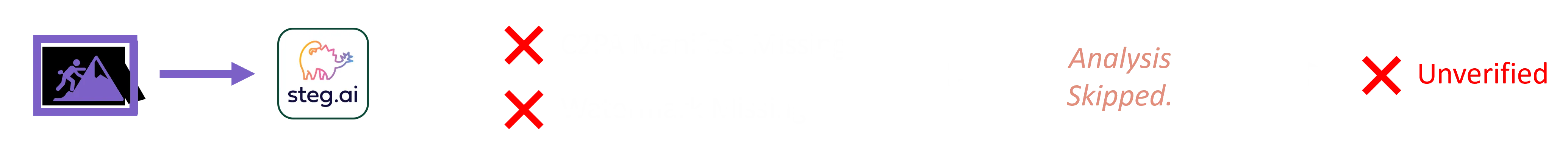

Scenario 3. The final figure above outlines a third scenario, in which the asset has been significantly edited; perhaps it is a deepfake alteration of an originally watermarked asset. Because the edits were severe, both the C2PA content credential and the watermark are missing, so Steg AI returns an unverified result for this asset.
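Taken together, the three scenarios amount to a small decision procedure. The sketch below encodes it under stated assumptions: `Submission` and `authenticate` are hypothetical names, `detected_edits` stands in for the analysis step (which is not modeled), the per-customer policy is a set of allowed edit types, and treating a missing watermark as unverified even when a credential is present is our own choice, since none of the scenarios covers that case.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Submission:
    credential: Optional[dict]    # embedded credential, or None if it was stripped
    watermark_url: Optional[str]  # URL decoded from the watermark, or None if unreadable
    detected_edits: Set[str] = field(default_factory=set)  # output of the (unmodeled) analysis


def authenticate(sub: Submission, manifest_store: dict, allowed_edits: Set[str]) -> str:
    """Hypothetical Step 2 decision logic mirroring Scenarios 1-3."""
    if sub.watermark_url is None:
        # Scenario 3: the watermark did not survive, so there is nothing to anchor on.
        return "unverified"
    # Scenario 1 uses the embedded credential; Scenario 2 recovers it from the watermark URL.
    credential = sub.credential or manifest_store.get(sub.watermark_url)
    if credential is None:
        return "unverified"
    # Verified only if every detected edit is allowed by this customer's policy.
    return "verified" if sub.detected_edits <= allowed_edits else "unverified"


url = "https://manifests.example.com/abc123"
store = {url: {"actions": ["watermarked"]}}
policy = {"recompression"}

print(authenticate(Submission(store[url], url), store, policy))               # verified (Scenario 1)
print(authenticate(Submission(None, url, {"recompression"}), store, policy))  # verified (Scenario 2, top)
print(authenticate(Submission(None, url, {"face_swap"}), store, policy))      # unverified (Scenario 2, bottom)
print(authenticate(Submission(None, None, {"face_swap"}), store, policy))     # unverified (Scenario 3)
```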