California took a major step toward greater AI transparency in September 2025 with the signing of Assembly Bill 853 by Governor Gavin Newsom. Also known as the California AI Transparency Act, AB 853 requires companies that build, host, or distribute generative AI systems to meet new transparency and disclosure obligations. Under the law, such companies must embed provenance information directly into AI-generated content and must also provide free, publicly accessible tools that allow users to detect and verify this embedded data. Together, these measures aim to promote accountability, traceability, and user trust in AI-generated media.

Failure to comply with AB 853’s requirements carries monetary consequences. Companies that do not meet the law’s transparency and disclosure obligations are subject to civil penalties that accrue for each day of noncompliance. These penalties are designed to ensure that developers and distributors of generative AI systems treat provenance disclosure as a core responsibility of operating in California.

California is a trailblazer in defining disclosure requirements for generative AI systems, but it will not be the only jurisdiction to do so. The European Union’s AI Act, set to take effect in 2026, introduces similar requirements for transparency. Together, AB 853 and the EU AI Act represent a global shift towards AI accountability and provenance standards. These measures are intended to strengthen user trust, advance consumer protection, and increase the integrity of digital information.

What is Assembly Bill 853, or the AI Transparency Act?

Assembly Bill 853, also known as the AI Transparency Act, is a California law that requires companies to increase transparency in their AI content generation and modification by January 1, 2027. It applies to covered providers, meaning companies that create or produce generative AI systems that are publicly available in California and have more than one million monthly users. This includes large-scale social media platforms, generative AI providers, and media marketplaces.

Example of image generated using ChatGPT

Example of image manipulated using ChatGPT

Generative AI systems are technologies that are capable of creating new content such as text, images, video, audio, and code, based on patterns learned from large data sets. These systems power many of today’s most well-known products, like ChatGPT, Adobe Firefly, or Synthesia. These tools have transformed how media is created, personalized, and distributed while also introducing new challenges around authenticity, attribution, and content manipulation.

| Category | Companies | How they use Generative AI |
|---|---|---|
| Social Media Platforms | Meta (Facebook, Instagram); TikTok; YouTube; X (Twitter) | Use Generative AI to recommend, edit, and create user content through filters, effects, and personalized media tools. |
| Generative AI Providers | OpenAI; Character.AI; Anthropic; Stability AI | Develop large-scale AI models that generate images and videos available to the public. |
| Media Marketplaces | Shutterstock; Getty Images; Adobe Stock; Pond5 | Host, distribute, and generate AI-assisted or AI-created photos, videos, and creative assets. |

Under AB 853, these providers must offer a free AI detection tool that lets users determine whether content was generated or altered by the provider’s system and that displays provenance data, if present. Companies must also ensure that content generated or substantially altered by their products includes a latent disclosure, which can take the form of a forensic watermark. In addition, they are required to give users the option of a manifest disclosure, that is, a clear and visible watermark showing that the content is AI-generated.

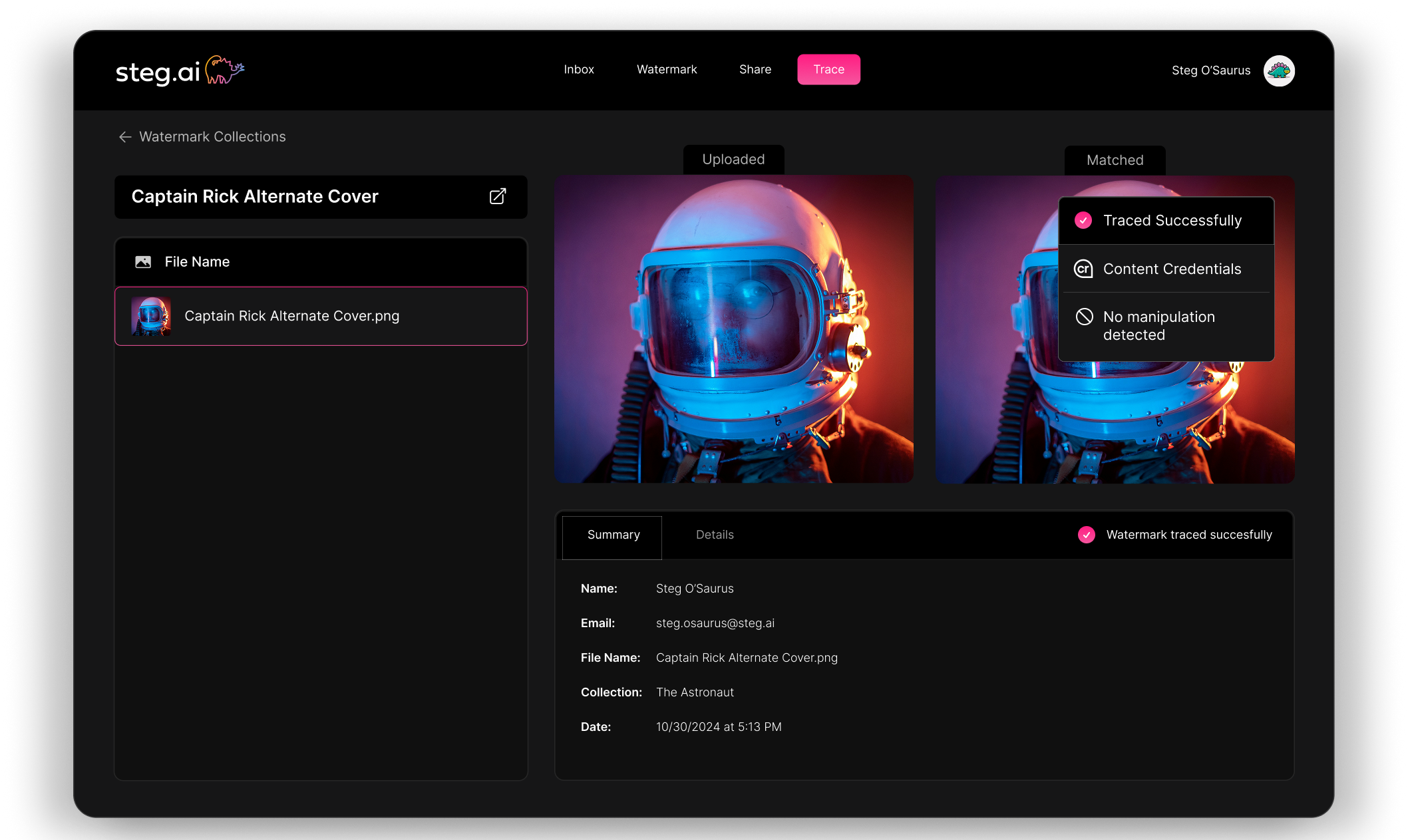

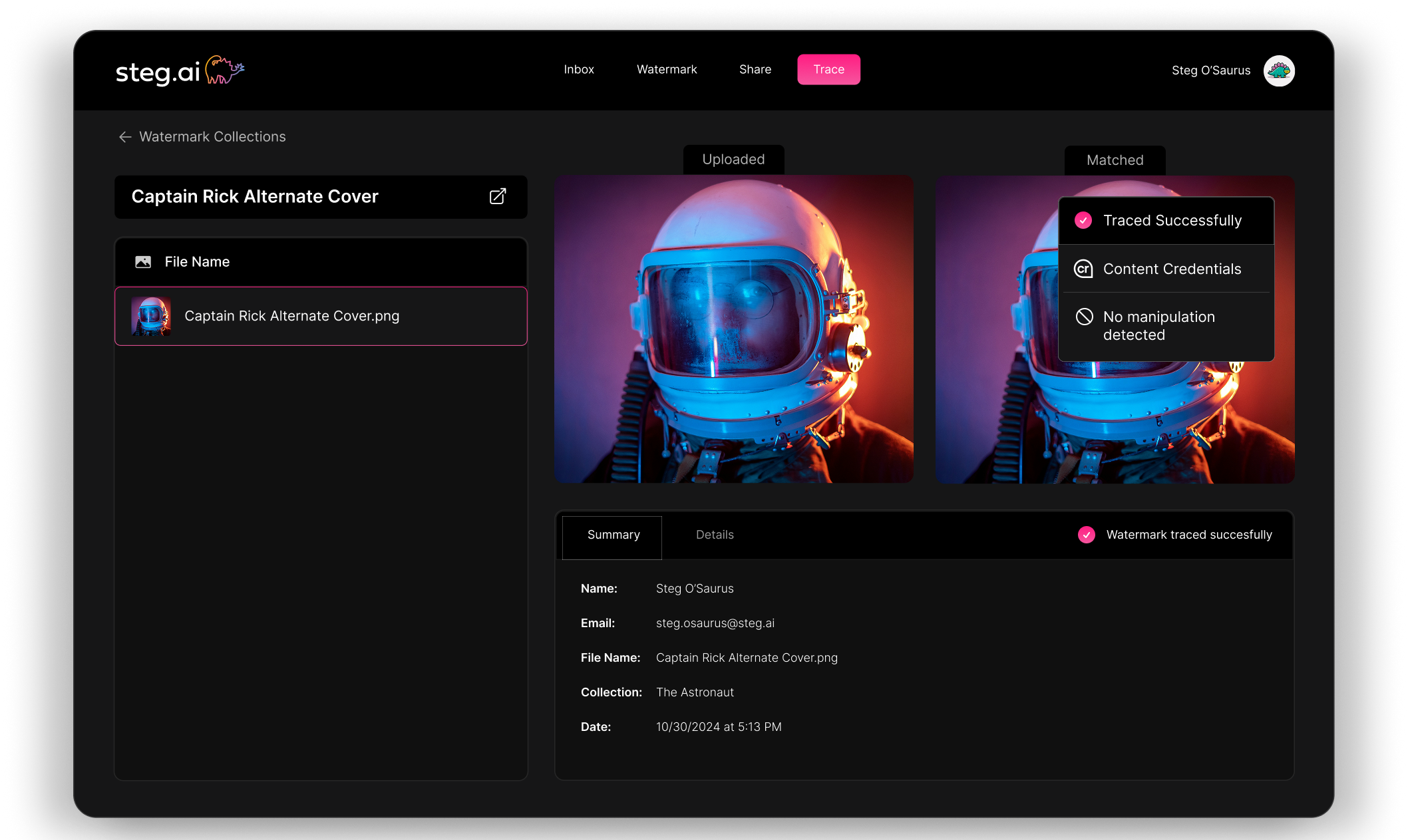

Steg.AI’s web-based AI detection tool

This law further stipulates that platforms cannot strip or remove provenance or latent disclosures, meaning these disclosures must be permanent or extraordinarily difficult to remove. It also prohibits the development or use of tools and services whose primary purpose is to remove or bypass these disclosures.

Non-compliance with AB 853 results in civil penalties of $5,000 per violation, per day. A violation could mean that a company did not include required disclosures, did not offer a free detection tool, or removed disclosures from existing content. It could also be argued that each piece of content a company creates without the required disclosures counts as a separate violation.
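To make the scale of daily penalties concrete, here is a minimal arithmetic sketch. It assumes the $5,000 per violation per day figure stated above and simply multiplies it by the number of days and the number of violations. The function name `estimated_exposure` and the inclusive day count are illustrative assumptions, not anything defined by the law, and how violations are actually counted is a legal question this sketch does not answer.

```python
from datetime import date

# Figure stated in this article; treat it as an input, not legal advice.
PENALTY_PER_DAY_USD = 5_000


def estimated_exposure(first_day: date, last_day: date, violations: int = 1) -> int:
    """Estimate penalties for `violations` that each run from first_day
    through last_day, counting both endpoints (an assumption)."""
    if last_day < first_day:
        raise ValueError("last_day must not precede first_day")
    if violations < 0:
        raise ValueError("violations must be non-negative")
    days = (last_day - first_day).days + 1
    return days * violations * PENALTY_PER_DAY_USD


if __name__ == "__main__":
    # Two hypothetical violations lasting the first 30 days of 2027.
    total = estimated_exposure(date(2027, 1, 1), date(2027, 1, 30), violations=2)
    print(f"${total:,}")  # $300,000
```

Under these assumptions, two violations left unresolved for 30 days come to $300,000. If each piece of content were counted as its own violation, the `violations` input would grow with output volume.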

Steg.AI directly supports compliance with these obligations. Its forensic watermarking technology embeds imperceptible yet robust identifiers into media files, enabling latent disclosure that persists even after compression, cropping, or format conversion. Steg’s detection platform, which can be deployed as a publicly accessible verification tool, meets the bill’s requirement for a free detection mechanism. Additionally, Steg’s system supports manifest disclosures through optional visible watermarks, allowing companies to clearly label AI-generated content.

By integrating Steg.AI’s watermarking and detection capabilities, providers can satisfy both latent and manifest disclosure mandates under AB 853, achieving regulatory compliance, consumer transparency, and stronger public trust.

How Steg.AI’s Digital Watermarking Helps with AB 853 Requirements

This is where digital watermarking becomes crucial for provenance. Here’s how a company using Steg.AI can comply with AB 853’s requirements and avoid daily civil penalties:

Latent disclosures & embedding provenance metadata

Steg.AI invisibly embeds information into content using forensic watermarking, without degrading quality. The embedded information can include the creator, device, and AI system used, which aligns with “latent disclosure” requirements.

Manifest disclosures & visible labeling

Steg.AI can visibly watermark content, for example with a visible badge or tag. Pairing forensic watermarks with visible watermarking provides a layered disclosure: the visible mark gives viewers upfront notice, while the forensic watermark lets the detection tool verify origin.

Permanence or hard-to-remove disclosures

Steg.AI’s watermarks, developed with Light Field Messaging technology, survive common transformations such as recompression, scaling, and format changes while remaining detectable. This supports the “extraordinarily difficult to remove” standard.

Detection tools for provenance & verifying source

Steg.AI includes detection tools that can extract embedded watermarks and prove origin/provenance, meeting detection tool obligations.

Conclusion

The enactment of California AB 853 signals the end of AI-generated content circulating without context, or at least the beginning of a significant compliance risk for doing so. For companies that generate, distribute, or host AI content, embedding provenance and disclosures will soon shift from a “nice to have” to a legal requirement.

The law takes effect on January 1, 2027, when companies will face immediate civil penalties for non-compliance. Organizations that begin integrating provenance technologies now will be better positioned to meet this deadline and avoid hasty retrofits after the fact.

A common question about this law is whether adopting the C2PA (Coalition for Content Provenance and Authenticity) metadata standard alone will be sufficient for compliance. While C2PA provides a strong foundation for embedding and verifying provenance data, it can easily be stripped because it is attached to the image or video file rather than embedded in the content itself. C2PA implementation should therefore be paired with watermarking so that provenance information survives metadata removal.
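The difference between attached metadata and information embedded in the content can be illustrated with a toy sketch. Everything here is hypothetical: the `Media` class stands in for an image file, a plain dictionary stands in for a C2PA manifest, and the least-significant-bit scheme is a deliberately simple stand-in for a watermark. It is not Steg.AI's method, and unlike a forensic watermark it would not survive recompression or resizing.

```python
from dataclasses import dataclass, field


@dataclass
class Media:
    """Hypothetical stand-in for an image: samples plus attached metadata."""
    pixels: list[int]                                   # 8-bit grayscale samples
    metadata: dict[str, str] = field(default_factory=dict)


def strip_metadata(media: Media) -> Media:
    """Simulate a tool that keeps the pixels but drops attached metadata."""
    return Media(pixels=list(media.pixels))


def embed_bits(pixels: list[int], payload: bytes) -> list[int]:
    """Toy scheme: write payload bits into the lowest bit of each sample."""
    bits = [(byte >> (7 - i)) & 1 for byte in payload for i in range(8)]
    if len(bits) > len(pixels):
        raise ValueError("payload too large for this image")
    out = list(pixels)
    for idx, bit in enumerate(bits):
        out[idx] = (out[idx] & ~1) | bit
    return out


def extract_bits(pixels: list[int], n_bytes: int) -> bytes:
    """Read n_bytes back out of the lowest bit of each sample."""
    out = bytearray()
    for b in range(n_bytes):
        byte = 0
        for i in range(8):
            byte = (byte << 1) | (pixels[b * 8 + i] & 1)
        out.append(byte)
    return bytes(out)


payload = b"AI:gen-v1"  # made-up provenance tag
original = Media(pixels=[(i * 37) % 256 for i in range(256)],
                 metadata={"provenance": "AI:gen-v1"})
marked = Media(pixels=embed_bits(original.pixels, payload),
               metadata=dict(original.metadata))
stripped = strip_metadata(marked)

print(stripped.metadata)                            # {}
print(extract_bits(stripped.pixels, len(payload)))  # b'AI:gen-v1'
```

After stripping, the attached record is gone but the tag carried in the samples can still be read, which is the reason for pairing the two approaches. A production watermark would additionally need to withstand the transformations described earlier, which this toy scheme does not attempt.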

Steg.AI provides one of the simplest paths to compliance: embedding metadata, enabling detection, and ensuring disclosures are robust and verifiable. Companies that act early and deploy forensic watermarking will not only reduce regulatory risk but also strengthen user trust, content authenticity, and brand integrity in an increasingly AI-driven media landscape.